Due to their different ports, the http:// and https:// versions of your content are considered two different websites by search engines. To prevent duplicate content issues that can impede your SEO, here are some great techniques for ensuring only one version of your content is cached.

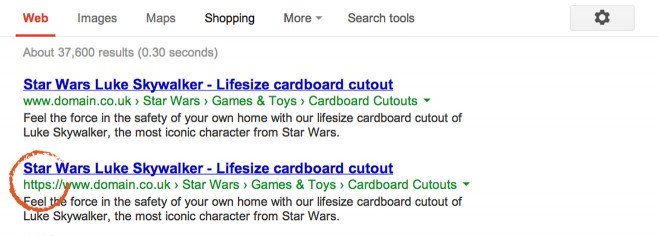

SSL certificates are of course commonplace, but if you don’t take any measures to prevent both versions being cached, then you may end up seeing something like this in the SERPs:

This dummy screenshot illustrates how Google can crawl, cache and display a mixture and duplications of both secure and non-secure content. To prevent these duplications and mixtures, there are a few different ways of nominating a single consistent format.

Option 1. The Canonical Link Element

In the first instance, I’d recommend utilising the Canonical Link Element. This handy Link element looks a little like this and is simply added within the Head tags of your site’s pages:

<link rel="canonical" href="http://www.domain.com/star-wars/toys/cut-outs/luke-skywalker.html"/>

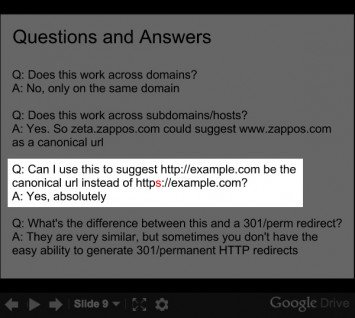

Supported and recommended by Google, Yahoo and Bing (sort of), the Canonical Link Element allows webmasters to declare the preferred or ‘canonical’ location for their content.

So if we refer back to our screenshot example from earlier, applying the Canonical Link Element to our https:// content, with its href pointing at the http:// URL, tells the search engines to use only the http version within the search listings. It is treated as a strong hint rather than a directive, but it is widely respected. To learn more about the Canonical Link Element, Matt Cutts explains it here back in 2009 when it was introduced.
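On a bespoke PHP site, the tag can be generated rather than hand-written on every page. The following is only a minimal sketch: it assumes the preferred host is www.domain.com (taken from the example above) and that query strings don't change the page content, so they are dropped. The names canonical_url and canonical_link_tag are hypothetical helpers, not part of PHP or any plugin.

```php
<?php
// Hypothetical helper: build the canonical URL for a request path,
// always on http:// so that the https:// copy points back to it.
function canonical_url(string $host, string $requestUri): string
{
    // Assumption: query strings do not change the content, so keep the path only.
    $path = parse_url($requestUri, PHP_URL_PATH);
    if (!is_string($path) || $path === '') {
        $path = '/';
    }
    return 'http://' . $host . $path;
}

// Hypothetical helper: wrap the canonical URL in the Link element.
function canonical_link_tag(string $host, string $requestUri): string
{
    $href = htmlspecialchars(canonical_url($host, $requestUri), ENT_QUOTES, 'UTF-8');
    return '<link rel="canonical" href="' . $href . '"/>';
}

// Place this inside the Head tags of the page template.
echo canonical_link_tag('www.domain.com', $_SERVER['REQUEST_URI'] ?? '/') . "\n";
```

The host is hard-coded here rather than read from the request, so that both the http and https copies of a page always emit exactly the same canonical URL.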

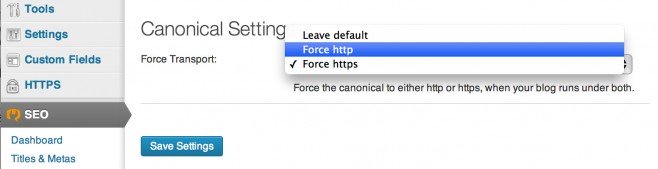

The Canonical Link Element & WordPress

If your website is built using WordPress, then it’s worth noting that Yoast’s fabulous SEO for WordPress plugin provides everything you need to deploy the Canonical Link Element:

Via the ‘Permalinks’ Settings tab you can choose between http and https if you have the website running under both.

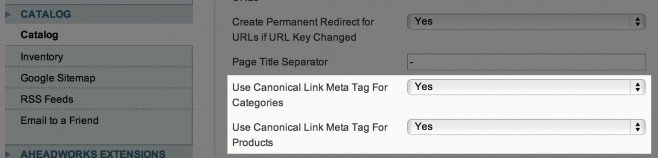

The Canonical Link Element within Magento

Since Magento 1.4 the Canonical Link Element feature is built-in as standard. It was added quite quickly by Magento’s developers to tackle existing duplicate content issues due to products being categorised and accessed via many different URLs.

It’s worth noting however that the canonical tag will only appear on categories and products by default. Despite this, checking both boxes will prevent the key canonicalisation issues faced with secure Magento websites.

Option 2. Serve a different Robots.txt for https

Whilst the Canonical Link Element is the best solution, it isn’t always practical to implement. If your site is bespoke, very large or built on a custom CMS, then implementing a site-wide Canonical tag might be too complicated a task.

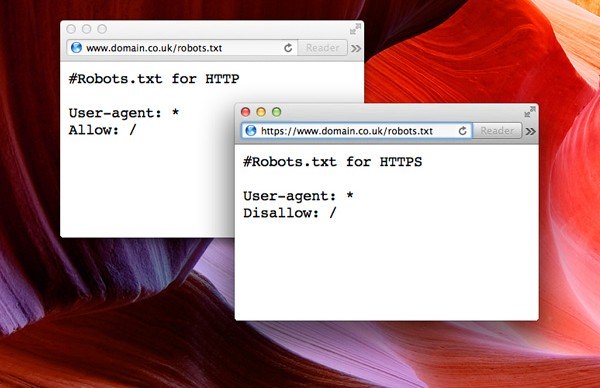

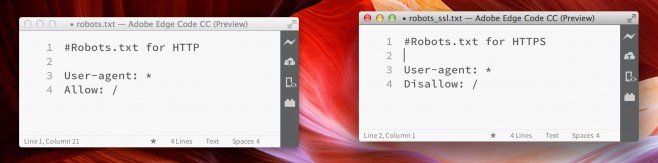

As I mentioned earlier, the http and https versions of your website are treated as two separate sites by the search engines. Therefore, it is possible to disallow the bots from crawling the https version of your website while leaving the http version open. To do this, we can use .htaccess to serve two different robots.txt files: one for the secure https site, and one for the regular non-secure http site. Save your regular robots.txt file for the non-secure site as robots.txt, but save your disallowed secure robots.txt file as robots_ssl.txt like in the screenshot below:

So now you should have two different robots.txt files at the root level of your site: the regular ‘allowed’ version for your non-secure http site, and a second Robots file saved as robots_ssl.txt that will be served for the secure site. Next, apply the following commands to your site’s .htaccess file:

RewriteEngine on

RewriteCond %{SERVER_PORT} ^443$

RewriteRule ^robots\.txt$ robots_ssl.txt [L]

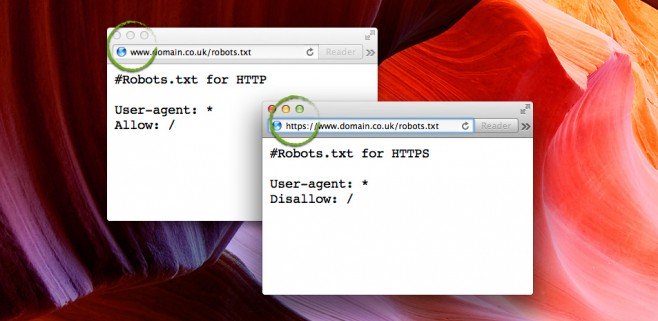

This .htaccess rewrite will essentially serve ‘robots_ssl.txt’ as ‘robots.txt’ when the user/bot accesses the file via port 443 (the secure https version of the website). Once in place, try loading the robots.txt file via both http and https. You should hopefully get something like this:
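As a rough guide, and assuming you want the whole http site crawlable and the whole https site blocked, the two responses might contain something like the following. Your own regular robots.txt may well carry extra rules, so treat this as an illustration only.

```text
# http://www.domain.com/robots.txt (served from robots.txt)
User-agent: *
Disallow:

# https://www.domain.com/robots.txt (served from robots_ssl.txt)
User-agent: *
Disallow: /
```

An empty Disallow line permits everything, whereas `Disallow: /` blocks the entire site for the matching user agents.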

It’s worth noting, however, that blocking the bots from your https website won’t remove any https listings that are already cached; it will merely prevent those pages from being crawled again. This makes it a good preventative solution. If you’re looking to remove existing duplicates from the listings, use the ‘Meta Robots Tag’ in Option 3 below instead, because bots can only read a noindex tag on pages they are still allowed to crawl.

Option 3. The Meta Robots Tag

If neither the Canonical Link Element nor the Robots.txt solution is viable, then there is one final method that you can deploy: the Meta Robots Tag. Much like with the .htaccess code in Option 2, PHP can also be used to detect if the content is being served via https. Add the following code within the Head tag of your pages:

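<?php

// Only output the tag when the page is being served over https

if (isset($_SERVER['HTTPS']) && strtolower($_SERVER['HTTPS']) == 'on') {

// Allow the secure page to be crawled, but keep it out of the index

echo '<meta name="robots" content="noindex,follow" />'. "\n";

}

?>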

The Meta Robots tag will be deployed on each page of the secure website only. The Meta Robots Tag is set to allow the page(s) to be crawled but not to be indexed/cached.
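Some server setups report https differently (for example, a value other than 'on', or only the port), so the check above may need loosening. Below is a hedged sketch of a slightly more tolerant version; is_https_request and robots_meta_tag are hypothetical helper names, and the port fallback simply mirrors the SERVER_PORT test used in Option 2.

```php
<?php
// Hypothetical helper: decide whether a request arrived over https.
// $server is expected to look like PHP's $_SERVER superglobal.
function is_https_request(array $server): bool
{
    // HTTPS is non-empty on secure requests; some servers set it to "off" otherwise.
    $https = strtolower((string) ($server['HTTPS'] ?? ''));
    if ($https !== '' && $https !== 'off') {
        return true;
    }
    // Fall back to the port, mirroring the .htaccess check on port 443.
    return (string) ($server['SERVER_PORT'] ?? '') === '443';
}

// Hypothetical helper: return the noindex tag for secure pages, or nothing.
function robots_meta_tag(array $server): string
{
    return is_https_request($server)
        ? '<meta name="robots" content="noindex,follow" />' . "\n"
        : '';
}

// Place this inside the Head tags of the page template.
echo robots_meta_tag($_SERVER);
```

Passing the server array in as a parameter, rather than reading $_SERVER inside the function, makes the check easy to try out with sample values before deploying it.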

Conclusion

Whilst mild duplicate content issues can arise with site-wide SSL certificates, they can be very easily prevented and overcome by effectively using the Canonical Link Element.

Whether using an established framework like Magento or WordPress, or working with a bespoke build, the Canonical Link Element is the most effective and Google-recommended solution.

However, the other two options above can be equally effective, although they may take longer to take effect if you’re fixing duplicate content issues rather than preventing them. I’d love to hear anyone’s questions, comments or contributions to this post in the comments below.